5 Steps to Successful Automation: AdTech Holding QA Team Insights

Delivering a high-quality product with top-notch functionality is the main goal and top priority of AdTech Holding. How do we achieve it again and again?

The key to success is automation at every development stage, from testing to release.

This is how automation helps us stay time-efficient and keep quality at the highest possible level:

| Automated process | How it helps |
|---|---|
| Testing | Lets us run all necessary tests quickly |
| Testing Environment Setup | Saves time on manual environment updates and tests |
| Results Delivery | Gives every team member involved a transparent status of every task |

However, it wasn’t always like this.

Mikhail Sidelnikov, Lead QA of the AdTech Product Team, shared how the team built smooth automation in five steps, literally from scratch.

How It Started

Back in 2016, the AdTech QA Team wasn’t even close to automation. In fact, the holding was far from having well-organized quality assurance processes.

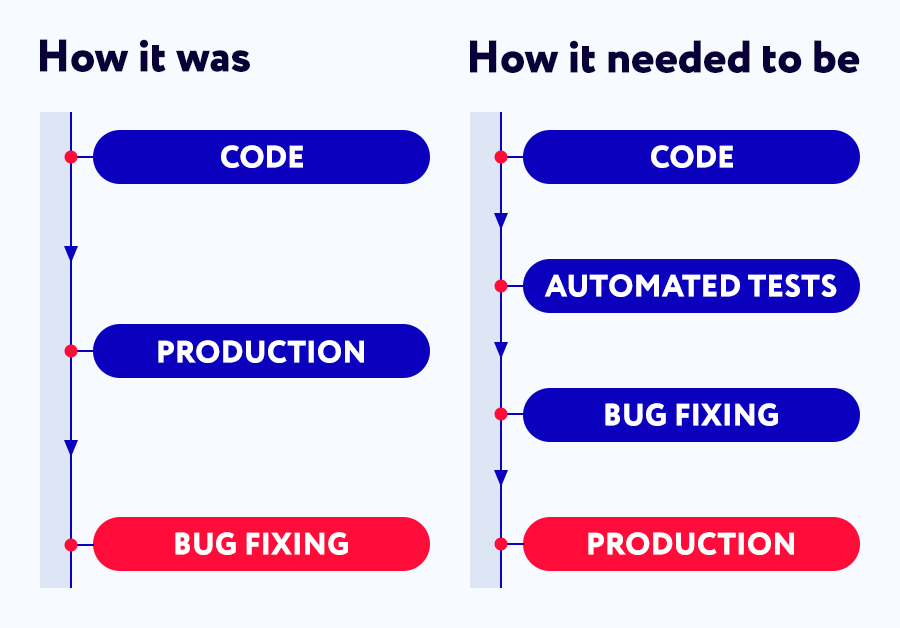

Mikhail Sidelnikov: Deploying code to production without thorough testing was common practice. Sometimes it worked out, but it also meant too many reverted commits, and each of them meant a potential loss of money.

To change this situation, the QA team drew up a plan for a new, efficient process:

- Identify the functional parts and modules for which the team will write automated test cases

- Create tests, internal libraries, and dedicated infrastructure for quick and convenient testing

- Plan and implement a high-quality automated CI/CD process that minimizes manual work

- Design a test results delivery process that makes everything transparent to every team member, from QA engineers to Product Owners.

This plan marked the beginning of the automation era, and the team began putting it into effect.

The Road to Automation

The new strategy raised five legitimate questions:

1. What should we cover with tests?

2. Which frameworks and programming languages should we use?

3. How should we use existing general practices?

4. When should we launch tests?

5. How do we ensure transparency at every step?

Answering each of these questions in detail became a successful step toward automating the development and QA processes.

Creating the Right Tests

Mikhail Sidelnikov: The first and usually the most challenging question you must ask yourself when trying to build automation is, ‘What should we cover with tests?’

To answer this question, let’s first look at the two main components of the product (in our case, an ad network):

- Backend: several microservices written in PHP and Go

- Frontend: user accounts of publishers and advertisers

The most efficient way to understand what requires testing is to find out which functionality is the most popular. In other words, the question can be rephrased as ‘Which features do customers use most?’

How can you find the answer? There are several options:

- Ask team members, product managers, and product owners to get some idea of which functionality has priority. However, such information is usually too vague to give a clear picture.

- Check statistics and metrics, if they are collected at all.

- Look at the overall demand: which requests are the most frequent in the system?

The last option turned out to be the most convenient: all requests were accurately collected and tracked, so QA engineers got immediate feedback on which tests should be run first.
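To make the request-frequency approach concrete, here is a minimal Java sketch that counts requests per endpoint and ranks them, so the most-used functionality gets covered first. It assumes a hypothetical plain-text log with one `METHOD /path STATUS` entry per line; the class and method names are illustrative and not part of AdTech's tooling.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

/**
 * Ranks endpoints by request count so the most-used functionality is tested first.
 * Assumes a hypothetical log format: one "METHOD /path STATUS" entry per line.
 */
public class TestPriority {

    /** Returns the n most frequent "METHOD /path" keys, most frequent first; ties sorted by name. */
    static List<Map.Entry<String, Long>> topEndpoints(List<String> logLines, int n) {
        Map<String, Long> counts = logLines.stream()
                .map(line -> line.trim().split("\\s+"))
                .filter(parts -> parts.length >= 2)               // skip malformed lines
                .map(parts -> parts[0] + " " + stripQuery(parts[1]))
                .collect(Collectors.groupingBy(key -> key, Collectors.counting()));

        Comparator<Map.Entry<String, Long>> byCountDesc =
                Map.Entry.<String, Long>comparingByValue().reversed();

        return counts.entrySet().stream()
                .sorted(byCountDesc.thenComparing(Map.Entry.<String, Long>comparingByKey()))
                .limit(n)
                .collect(Collectors.toList());
    }

    /** Drops the query string so /ads?id=1 and /ads?id=2 count as the same endpoint. */
    static String stripQuery(String path) {
        int q = path.indexOf('?');
        return q >= 0 ? path.substring(0, q) : path;
    }

    public static void main(String[] args) {
        List<String> log = List.of(
                "GET /api/campaigns?page=1 200",
                "GET /api/campaigns?page=2 200",
                "POST /api/creatives 201",
                "GET /api/stats 200",
                "GET /api/campaigns 200");
        for (Map.Entry<String, Long> e : topEndpoints(log, 2)) {
            System.out.println(e.getKey() + " -> " + e.getValue());
        }
    }
}
```

In practice the input would come from whatever request tracking is already in place; the ranking logic stays the same.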

Choosing Frameworks and Language

Again, there were several ways to choose a programming language for automation:

- Stick to the same language used for writing the code.

Mikhail Sidelnikov: Many see a big apparent advantage in this option: developers can easily fix existing tests and write new ones themselves. However, I don’t think that’s the strongest argument. In practice, developers rarely work with QA tests, while QA engineers have to struggle with a language they are not used to.

- Use a language that is familiar and convenient for the QA Team.

Mikhail Sidelnikov: It’s much easier to help developers learn how QA tests work — even if they are written in a different language. So, we decided to go this way.

The AdTech Holding QA Team chose Java: the whole team had extensive experience writing Java code and knew how to build infrastructure for it. Besides, thanks to its frameworks, Java offers many out-of-the-box features, for example, simple yet efficient test parallelization in JUnit.

As a result, the team settled on the following stack:

- Maven – for project building

- Java – as the programming language

- JUnit 5 – as a framework for creating tests and easy parallel testing

- Selenide – a ready-made solution for web tests

- Aerokube: Selenoid + Ggr – for automating work with infrastructure

- TeamCity – as a tool for launching tests, familiar and convenient for all team members

- Allure (and later Allure TestOps) – for storing manual test cases and test results

- Docker – for various infrastructure tasks related to the environment

- GitHub – for storing code, accessible to all company members

Mikhail Sidelnikov: This stack has proven efficient across all our projects. It works flawlessly whether you have 50, 5,000, or more tests.

Sharing Solutions

The overall development system at AdTech Holding consists of many different projects, but they mostly share the same database.

There is no need to build a separate integration with the shared database for every project. Instead, you can create a so-called wrapper: a block of code that multiple teams and their members can reuse.
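A wrapper of this kind can be sketched in Java as an interface that every project's tests code against, backed by one shared implementation. Everything below (`CampaignRepository`, `Campaign`, the in-memory map) is hypothetical; the in-memory class only stands in for a real JDBC-backed implementation so the sketch runs anywhere.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

/**
 * Hypothetical shared wrapper around the common database: every project's tests
 * depend on the CampaignRepository interface instead of their own integration.
 */
public class SharedDbWrapper {

    /** Hypothetical row type shared by all projects. */
    record Campaign(long id, String name, boolean active) {}

    /** The single contract that all test projects code against. */
    interface CampaignRepository {
        void save(Campaign campaign);
        Optional<Campaign> findById(long id);
        List<Campaign> findActive();
    }

    /** In-memory stand-in; a real version would query the shared database via JDBC. */
    static class InMemoryCampaignRepository implements CampaignRepository {
        private final Map<Long, Campaign> rows = new LinkedHashMap<>();

        @Override
        public void save(Campaign campaign) {
            rows.put(campaign.id(), campaign); // insert or overwrite by primary key
        }

        @Override
        public Optional<Campaign> findById(long id) {
            return Optional.ofNullable(rows.get(id));
        }

        @Override
        public List<Campaign> findActive() {
            List<Campaign> active = new ArrayList<>();
            for (Campaign c : rows.values()) {
                if (c.active()) {
                    active.add(c);
                }
            }
            return active;
        }
    }

    public static void main(String[] args) {
        CampaignRepository repo = new InMemoryCampaignRepository();
        repo.save(new Campaign(1, "spring-sale", true));
        repo.save(new Campaign(2, "old-promo", false));
        System.out.println(repo.findActive());
        System.out.println(repo.findById(2).isPresent());
    }
}
```

Because tests depend only on the interface, the shared library can change how it reaches the database without touching each project's tests.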

How can this be done effectively?

To implement it, we adopted Artifactory, which integrates easily with Maven:

1. Set up a free Artifactory instance for storing Java/Maven artifacts.

2. Configure settings.xml on all computers and servers where the tests will be launched.

3. Use the settings in pom.xml or your build system’s plugins to deploy artifacts to the repository. At AdTech, we use TeamCity.

Here is how automated library deployment works:

1. A change is made in a repository with shared code.

2. The build system tracks changes made in this repository.

3. When the library code is merged into the master branch, unit tests are launched and the library is deployed to Artifactory.

As a result, every team member can access the new version and use it right away, and the libraries are easy for everyone to use and maintain.

Launching Tests

To be more precise, the question is: “When is the right time to launch tests?”

Mikhail Sidelnikov: There are plenty of options, including nightly regression runs and manual testing on master after release, but that was not the approach we were looking for. What we needed was fast, high-quality CI/CD.

This is how the process was finally organized:

- All planned tasks are decomposed into smaller parts that can be worked on in parallel.

- The microservice architecture makes it possible to make small changes to individual components, which are easier to check. This minimizes the chance of mistakes.

- Then the QA team thoroughly tests every change.

Thanks to this set of practices, we at AdTech Holding have a very fast development process, with up to 50 deployments to production per day. Importantly, most of these deployments are not quick bug fixes but major tasks.

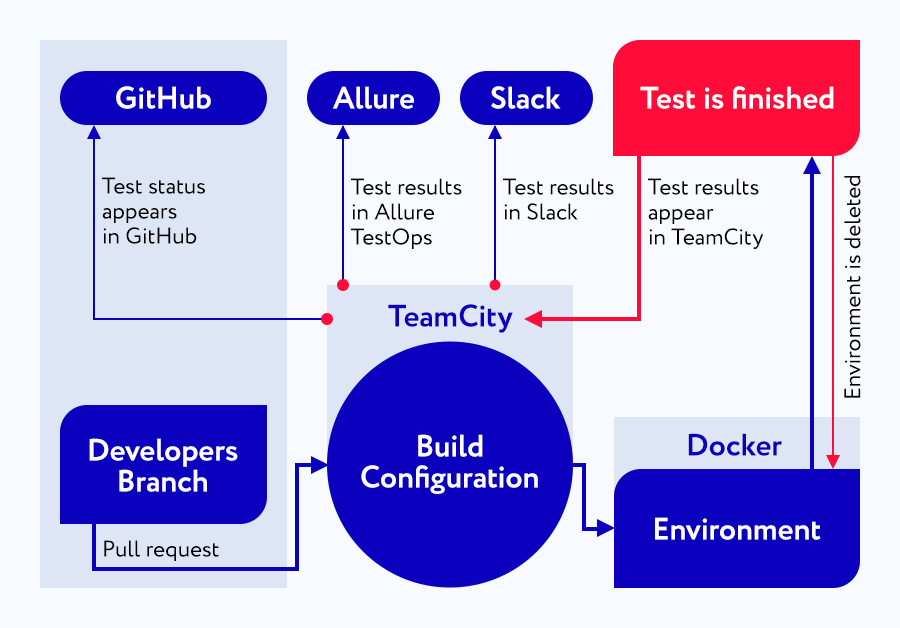

The QA team’s main concern was the last of these steps, the testing itself, and its integration into the overall development workflow. The final solution looks like this:

- 1. The development is processed in a separate repository branch. When a pull request is created, the build configuration is launched at TeamCity.

- 2. There can be several builds, including unit tests, tool checkers, and regular QA tests.

- 3. For backend QA tests, the required environment is automatically created with Docker. After the tests are finished, the environment is also automatically deleted, which saves much time.

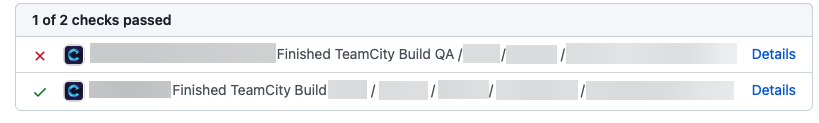

- 4. After a test run, GitHub receives an alert from Teamcity that shows a status of a build configuration in the Checks section. All the details are available at Teamcity, and you can reach them right from the Checks.

- 5. Such tests are performed for every added commit in a pull request.
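The create-then-always-delete pattern from step 3 maps naturally onto Java’s try-with-resources. The sketch below is an illustration under assumptions: the `Environment` interface is hypothetical, and the recorded `docker compose` commands assume Docker Compose, which the workflow above does not specify. A real implementation would run those commands (for example via `ProcessBuilder`) instead of only recording them.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;

/**
 * Sketch of a disposable test environment: created before a suite, always removed after.
 * Environment and RecordingEnvironment are hypothetical; the commands assume Docker Compose.
 */
public class EphemeralEnvironment {

    /** Something that is started before tests and must be torn down afterwards. */
    interface Environment extends AutoCloseable {
        void start();

        @Override
        void close(); // narrowed so callers need not handle a checked exception
    }

    /** Fake environment that records the commands a real one would run. */
    static class RecordingEnvironment implements Environment {
        final List<String> log = new ArrayList<>();

        @Override
        public void start() {
            log.add("docker compose up -d");
        }

        @Override
        public void close() {
            log.add("docker compose down -v");
        }
    }

    /** Starts env, runs the suite, and guarantees teardown via try-with-resources. */
    static boolean runSuite(Environment env, BooleanSupplier suite) {
        try (env) {
            env.start();
            return suite.getAsBoolean();
        }
    }

    public static void main(String[] args) {
        RecordingEnvironment env = new RecordingEnvironment();
        try {
            runSuite(env, () -> {
                throw new IllegalStateException("a test failed");
            });
        } catch (IllegalStateException e) {
            System.out.println("Suite aborted: " + e.getMessage());
        }
        System.out.println(env.log); // teardown is recorded even though the suite threw
    }
}
```

Tying teardown to `close()` means the environment is removed even when a test run throws, which helps keep leftover containers from accumulating between runs.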

Mikhail Sidelnikov: With this workflow, running tests this often doesn’t take much time. About 800 tests for one of the services take approximately 7–8 minutes to complete. That includes building the environment from about 20 Docker containers, which is not too long for a full regression testing cycle.
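A quick back-of-the-envelope check on these figures, simply dividing the quoted wall-clock time by the test count:

$$
\frac{7\text{--}8\ \text{min}}{800\ \text{tests}}
  = \frac{420\text{--}480\ \text{s}}{800\ \text{tests}}
  \approx 0.53\text{--}0.60\ \text{s per test}
$$

Since this figure already includes environment setup, and tests can run in parallel, it is an average wall-clock cost per test rather than the duration of any single test.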

Transparency

Finally, transparency: one of the key factors of successful automated testing.

In this article, we highlight the main principles of transparency. You can read more about transparency and why it matters so much in Quality Assurance in this interview.

Transparency means that every participant in the process receives the status of each test in a timely manner. This is how we do it at AdTech Holding:

Stage 1. The test status appears on GitHub automatically. All the QA team had to do was enable the corresponding build feature in TeamCity.

Stage 2. All test results are available in Allure/Allure TestOps, where you can check the status of every component and the whole testing history.

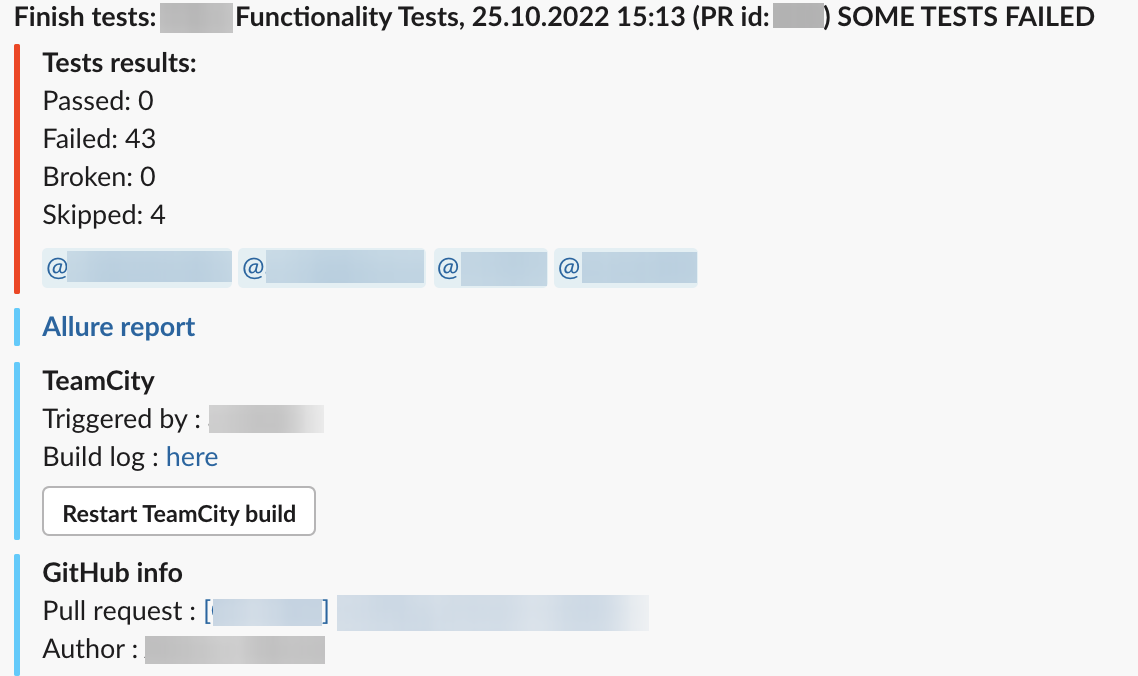

Stage 3. In-house Slack notifications. Although plenty of ready-made options exist, AdTech Holding built its own notification system that works exactly the way our teams need. We are constantly updating and improving it.

Everyone has quick access to test results, links to reports and builds in TeamCity, pull request data, and even a button to relaunch builds automatically. All blocks are configurable: if you don’t need one, just remove it in the build configuration parameters. And all of this is available in a single Slack app.
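A configurable notification like this could be assembled roughly as follows. This Java sketch builds a Slack Block Kit payload (`section` blocks with `mrkdwn` text and an `actions` block with a button) and drops any block whose build parameter is set to `false`. The parameter names (`notify.showReport` and so on), URLs, and class names are hypothetical, since AdTech’s in-house app is not public; posting the payload and handling the button click are left out.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/**
 * Builds a Slack Block Kit payload from optional blocks; any block can be switched off
 * with a build parameter set to "false". Parameter names and URLs are hypothetical.
 */
public class SlackReport {

    /** Minimal JSON escaping for backslashes, quotes, and newlines. */
    static String esc(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    /** A Block Kit "section" block with mrkdwn text. */
    static String section(String markdown) {
        return "{\"type\":\"section\",\"text\":{\"type\":\"mrkdwn\",\"text\":\"" + esc(markdown) + "\"}}";
    }

    /** An "actions" block with one button; the app's interactivity handler would read action_id/value. */
    static String relaunchButton(String buildId) {
        return "{\"type\":\"actions\",\"elements\":[{\"type\":\"button\","
                + "\"text\":{\"type\":\"plain_text\",\"text\":\"Relaunch build\"},"
                + "\"action_id\":\"relaunch_build\",\"value\":\"" + esc(buildId) + "\"}]}";
    }

    /** Blocks are on by default; a parameter value of "false" removes them. */
    static boolean enabled(Map<String, String> params, String key) {
        return !"false".equalsIgnoreCase(params.getOrDefault(key, "true"));
    }

    static String buildPayload(Map<String, String> params, String summary,
                               String reportUrl, String pullRequestUrl, String buildId) {
        List<String> blocks = new ArrayList<>();
        blocks.add(section(summary)); // the results summary is always included
        if (enabled(params, "notify.showReport")) {
            blocks.add(section("<" + reportUrl + "|Test report>"));
        }
        if (enabled(params, "notify.showPullRequest")) {
            blocks.add(section("<" + pullRequestUrl + "|Pull request>"));
        }
        if (enabled(params, "notify.showRelaunch")) {
            blocks.add(relaunchButton(buildId));
        }
        return "{\"blocks\":[" + String.join(",", blocks) + "]}";
    }

    public static void main(String[] args) {
        // Example input: the pull request block has been switched off in the build parameters.
        Map<String, String> params = Map.of("notify.showPullRequest", "false");
        System.out.println(buildPayload(params, "*Tests:* 10 passed, 0 failed",
                "https://reports.example.com/run/42", "https://github.com/example/repo/pull/1", "42"));
    }
}
```

Defaulting every block to "on" keeps the full message for builds that set no parameters, while any single build can opt out of blocks it doesn’t need.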

Summary

As this article shows, building high-quality, fast, and efficient automation doesn’t have to take much time and effort. All the processes we have shared have proven their effectiveness at AdTech, and we see no reason why other businesses couldn’t adopt them.